The wheel of technological innovation keeps spinning, and it is picking up speed. Recently, the debate about digitization has been conducted under a term that is both new and old: artificial intelligence. This debate is often marked by fear: AI seems to cause ethical problems and to be a high-risk technology. The starting point for these concerns is always autonomous weapons, the morally unsolvable dilemmas of autonomous driving, or AI systems with consciousness and an apparently logically unavoidable urge to take control of the world, which very few consider imminent. On the other hand, there is a very optimistic discourse that emphasizes the opportunities. One argument holds that prosperity can only be secured by staying economically connected to this technology. Another insight is that we Europeans can only carry our values into the age of artificial intelligence if we produce this technology ourselves and do not leave it to actors in the USA or China.

The current successes of AI research and implementation are certainly remarkable. In early November 2018, for example, the state-owned Chinese news agency Xinhua introduced a newsreader that is generated entirely by computer. According to media reports, Xinhua stressed that the advantage of an AI newscaster is that it can work 24 hours a day without a break. A strange justification, since such a system can work for a whole year and longer without a break.

Screenshot, source: https://youtu.be/5iZuffHPDAw

Like digitization, "artificial intelligence" has become an indeterminate term that popular discourse uses for anything about smart technology that frightens or inspires us, depending on one's perspective. To get to the bottom of the ethical issues, one has to take a closer look.

It has often been stressed that the term artificial intelligence does not fit at all. Intelligence cannot be artificial, so the argument goes, because intelligence requires consciousness. An alternative term is machine intelligence, which indicates that we are dealing with something categorically different from what we attribute to humans. However, machine intelligence is still a category defined in comparison to human abilities. We have certain difficulties in determining exactly what human intelligence is, but we know roughly what constitutes intelligent human behavior. In everyday life, we infer the intelligence of human beings by judging their behavior. In this sense, we can judge the behavior of a machine to be intelligent if the machine can successfully simulate some or even many important elements of human intelligence.

Systems controlled by algorithms are now regarded as intelligent if they can interpret data correctly, learn from data and use what they have learned to accomplish specific goals and tasks. One speaks of "weak AI" when an AI system is geared towards concretely definable abilities and reproduces them, such as learning to play Go and beating grandmasters. One would speak of "strong AI", on the other hand, if the intelligent system possessed not only individual abilities but also, for example, (self-)awareness, and could define and pursue new goals for itself completely independently, i.e. it could decide to learn and practice composing in addition to playing Go. Whether and when it will be possible to develop systems with "strong AI" is highly controversial. So, we are currently dealing with simulations of intelligent behavior by machines. Machines independently recognize patterns, adapt their "behavior" and can make "decisions" based on self-controlled analysis of data. The quotation marks are important: we should not, for example, attribute decision-making ability to machines, because taking responsibility for a decision and evaluating its consequences according to social norms and moral values is something we should reserve for people.

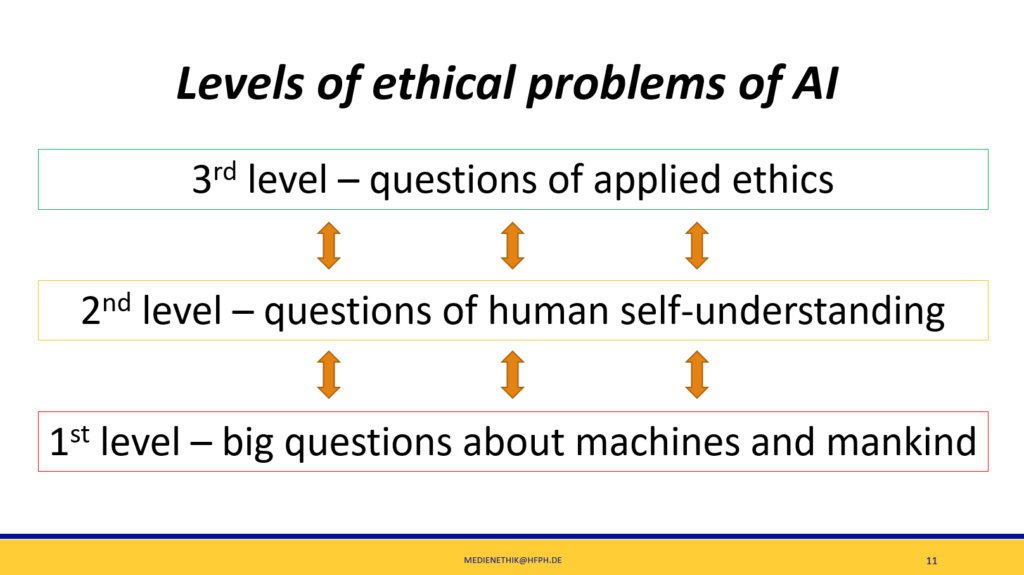

From here, the ethical problems of AI, understood as a further stage of digitization, can already be outlined. On a first level, the big questions about consciousness in the context of "strong AI" are particularly interesting. Is a computer consciousness conceivable, and what consequences does this have for the concept of human consciousness? What is thinking, and can beings other than humans do it? And if so, can or must specific rights be granted to such systems? Isn't a comprehensive superintelligence basically godlike? Many of these questions have their roots in the philosophy of mind, in neuroscience, in theology, or in all three. Anyone who assumes that thinking has purely material foundations (our biological brain functions) tends to assume that consciousness can also be realized in machines. At this point, current technological developments are forcing us to rethink what the human being is, what constitutes it, whether it has a soul and what that soul might be.

A second level deals with questions of human self-understanding. The question here is how an AI environment (with AI newscasters, computer assistants that react to speech, and completely autonomous vehicles) influences the way people think about themselves. What becomes of "human rationality" when machines can make better decisions than humans, for example in skin cancer diagnoses? What do guilt and responsibility mean when autonomous systems cause accidents? What is a human action worth when robots can act more precisely and tirelessly? What is lost when robots care for our elderly? In a way, we become new people through the technology we have at our disposal. Digital technologies, including AI systems, enable us to acquire new knowledge, to live differently (for example, through mobility) or to live longer. We do not become completely different people through new technology, but we can and will perceive ourselves anew. This can change a lot.

Finally, the third level deals with concrete questions of the use and regulation of the corresponding products. Informational self-determination, data protection and the promotion of research and business are also relevant topics here. These questions of applied ethics are as diverse as the fields of application of AI. The three levels outlined here must be considered together. Anthropological questions are tied to deeper considerations about the status of consciousness and thinking, and practical questions are tied to anthropological considerations. The moral aspects in all these areas, especially in the applied dimension, are extremely diverse. And they are important because we need answers today to the question of what to do. The fact that we are "only" dealing with weak AI does not mean that we have no strong ethical questions to deal with. These questions are very concrete and already on the agenda today. All of this is about redefining what is specifically human. We will not be able to answer these questions with technical tools alone, but also and above all on the basis of our experiences of community, music, dance, religion, love, art and human encounters.

First published in Opus Kulturmagazin 2019, https://www.opus-kulturmagazin.de/

Citing this article: Filipović, A. (2019, May 3). Ethics, Digitisation and Machine Intelligence. Unbeliebigkeitsraum. Retrieved from https://www.unbeliebigkeitsraum.de/2019/05/03/ethics,-digitisation-and-machine-intelligence/